# How to Run Jupyter Notebook on Windows 10

This guide shows you how to start a Spark master and worker (formerly called "slave") server and how to load the Scala and Python shells. It also lists the most important Spark commands.
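As a rough reference for those commands, here is a minimal sketch that only *builds* the command lines for a standalone master, one worker, and the two shells. The helper name `standalone_commands` is hypothetical, and it assumes `spark_home` points at an unpacked Spark distribution. On Windows the `sbin/*.sh` scripts are Bash-only, so the sketch launches the master and worker classes through `bin\spark-class.cmd` instead.

```python
from pathlib import PurePosixPath, PureWindowsPath


def standalone_commands(spark_home, host="localhost", port=7077,
                        windows=False, spark_version=(3, 0)):
    """Build command lines for a standalone master, one worker, and both shells.

    Hypothetical helper: spark_home is assumed to be an unpacked Spark
    distribution. Nothing is executed; the lists can be passed to subprocess.run.
    """
    home = (PureWindowsPath if windows else PurePosixPath)(spark_home)
    bin_dir = home / "bin"
    master_url = f"spark://{host}:{port}"  # 7077 is the default master port

    if windows:
        # The sbin/*.sh scripts need Bash, so start the daemons via spark-class.cmd.
        spark_class = str(bin_dir / "spark-class.cmd")
        master = [spark_class, "org.apache.spark.deploy.master.Master",
                  "--host", host, "--port", str(port)]
        worker = [spark_class, "org.apache.spark.deploy.worker.Worker", master_url]
        pyspark, spark_shell = bin_dir / "pyspark.cmd", bin_dir / "spark-shell.cmd"
    else:
        master = [str(home / "sbin" / "start-master.sh"),
                  "--host", host, "--port", str(port)]
        # Spark 3.1 renamed start-slave.sh to start-worker.sh.
        script = "start-worker.sh" if tuple(spark_version) >= (3, 1) else "start-slave.sh"
        worker = [str(home / "sbin" / script), master_url]
        pyspark, spark_shell = bin_dir / "pyspark", bin_dir / "spark-shell"

    return {
        "master_url": master_url,
        "master": master,
        "worker": worker,
        "python_shell": [str(pyspark), "--master", master_url],  # Python shell
        "scala_shell": [str(spark_shell), "--master", master_url],  # Scala shell
    }
```

For example, `standalone_commands(r"C:\spark", windows=True)["master"]` gives a list you could pass to `subprocess.run`. On Windows the master and worker run in the foreground, so start each one in its own console window. The master's web UI is served on port 8080 by default.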

## Installing Apache Spark

Apache Spark can be installed on:

- Ubuntu Linux (plain installation), tested on Ubuntu 16.04 or later
- Windows 7 and 10 (plain installation)
- Windows 10 with the Windows Subsystem for Linux (WSL)
- macOS Mojave, using Homebrew

For Ubuntu users who prefer to access Spark from Python, Michael Galarnyk's step-by-step guide "Install Spark on Ubuntu (PySpark)" walks through the whole installation. If you need help, please see that tutorial.
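If you are not sure which of the routes above applies to the machine you are on, the small sketch below picks one from Python's `platform` module. The function name `install_route` is hypothetical, and detecting WSL by looking for "microsoft" in the kernel release string is a common heuristic rather than an official API. It also assumes the WSL distribution is Ubuntu.

```python
import platform


def install_route(system=None, release=None):
    """Suggest which installation route from the list fits this machine (heuristic)."""
    system = system or platform.system()
    release = release or platform.release()
    if system == "Windows":
        return "Windows 7/10: plain installation"
    if system == "Darwin":
        return "macOS (Mojave): Homebrew"
    if system == "Linux":
        # WSL kernels report a release such as "4.4.0-19041-Microsoft"
        # or "5.10.16.3-microsoft-standard-WSL2".
        if "microsoft" in release.lower():
            return "Windows 10 with WSL: follow the Ubuntu steps inside WSL"
        return "Linux (Ubuntu 16.04 or later): plain installation"
    return "not covered by this guide: " + system
```

Calling `install_route()` with no arguments inspects the current machine. The explicit arguments exist only so the function can be checked without switching operating systems.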

## Downloading Spark and Installing PySpark

Spark is an open-source project of the Apache Software Foundation. If you don't have Python installed yet, I highly suggest installing it through Anaconda. This section walks through a typical local PySpark setup on your own machine.

Python has been part of Apache Spark almost from the beginning of the project (version 0.7.0, to be exact), but installing it was not the `pip install` type of setup the Python community is used to. That changed once PySpark was added to the Python Package Index (PyPI), which made a local setup much easier. pip is the package management system used to install and manage Python packages, and `pip install pyspark` downloads a Spark distribution that works well in local mode. PySpark needs the Spark JARs to run; if you are building Spark from source, see the "Building Spark" instructions.
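To confirm that the pip-installed package works in local mode, a minimal sketch is shown below. The helper names `installed_pyspark_version` and `local_session` are hypothetical. The code assumes that `pip install pyspark` has been run and that Java is available. `local[*]` is Spark's standard master string for local mode with one worker thread per logical core.

```python
import importlib.util
from importlib import metadata


def installed_pyspark_version():
    """Return the pip-installed PySpark version, or None if it is not installed."""
    try:
        return metadata.version("pyspark")
    except metadata.PackageNotFoundError:
        return None


def local_session(app_name="local-test"):
    """Start (or reuse) a SparkSession in local mode.

    Assumes `pip install pyspark` has been run and Java is on the PATH.
    """
    if importlib.util.find_spec("pyspark") is None:
        raise ImportError("pyspark is not installed; run: pip install pyspark")
    from pyspark.sql import SparkSession

    return (
        SparkSession.builder
        .master("local[*]")  # local mode: one JVM, one worker thread per core
        .appName(app_name)
        .getOrCreate()
    )
```

In a notebook you might run `spark = local_session()` followed by `spark.range(5).count()`. If that call raises `ImportError`, the package is missing for the Python that the notebook runs.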

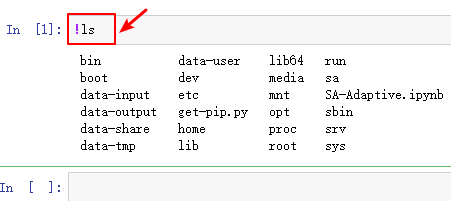

The pip packaging of PySpark is currently experimental and may change in future versions, although the Spark developers aim to keep it compatible. The README that comes with the pip package only contains basic information about pip-installed PySpark.

If Spark is already installed on your machine, the findspark library lets you bypass most of the environment setup. One question comes up again and again on forums. After running `pip3 install findspark`, `import findspark` in Jupyter still fails with `ImportError: No module named 'findspark'` (or `ModuleNotFoundError: No module named 'findspark'` on Python 3), or the notebook reports `No module named 'pyspark'`.
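A common cause of that error is that `pip3` installed the package into a different Python than the one the Jupyter kernel runs. The sketch below reports which interpreter the notebook is using and whether `findspark`, `pyspark` and `py4j` can be imported from it. It also builds a pip command that targets that same interpreter. The function names are hypothetical, and only the standard library is used.

```python
import importlib.util
import sys


def diagnose(modules=("findspark", "pyspark", "py4j")):
    """Report the running interpreter and whether each module is importable from it."""
    report = {"python": sys.executable}
    for name in modules:
        report[name] = importlib.util.find_spec(name) is not None
    return report


def pip_install_command(*packages):
    """Build a pip command that installs into *this* interpreter (the kernel's Python)."""
    return [sys.executable, "-m", "pip", "install", *packages]
```

Run `print(diagnose())` in a notebook cell. If `findspark` shows `False`, you can install it for the kernel with `subprocess.check_call(pip_install_command("findspark"))` or with IPython's `%pip install findspark` magic, and then restart the kernel.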

If you see the `ImportError: No module named 'pyspark'` error, the library is not installed for the Python you are running, so install it with `pip install pyspark`. findspark is not included in the pyspark package by default. To use it, install it with `pip install findspark`, then run `import findspark` and `findspark.init()` before importing pyspark. This also addresses `ImportError: No module named py4j.java_gateway`, because `findspark.init()` adds the py4j library bundled with Spark to the Python path.

Spark also needs Java, so install Java 8. For Python I would recommend Anaconda, as it's popular and widely used by the machine learning and data science community. Download and install either plain Python or the Anaconda distribution, which includes Python, the Spyder IDE, and Jupyter Notebook.
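The checks in that paragraph can be bundled into one cell that runs before `import pyspark`. The sketch below makes a few assumptions. Java is considered present if `JAVA_HOME` is set or `java` is on the `PATH`. A separately installed Spark is located through `SPARK_HOME`. The helper names `spark_env_problems` and `init_spark` are hypothetical. `findspark.init()` is only called when pyspark is not already importable.

```python
import importlib.util
import os
import shutil


def spark_env_problems(environ=None, which=shutil.which):
    """List missing prerequisites (Java, and pyspark or SPARK_HOME) as messages."""
    environ = os.environ if environ is None else environ
    problems = []
    if not environ.get("JAVA_HOME") and which("java") is None:
        problems.append("Java not found: install Java 8 and set JAVA_HOME or add java to PATH")
    if importlib.util.find_spec("pyspark") is None and not environ.get("SPARK_HOME"):
        problems.append("neither a pip-installed pyspark nor SPARK_HOME was found")
    return problems


def init_spark():
    """Make pyspark importable, using findspark only when pip's pyspark is absent."""
    problems = spark_env_problems()
    if problems:
        raise RuntimeError("; ".join(problems))
    if importlib.util.find_spec("pyspark") is None:
        import findspark  # install with: pip install findspark

        # Adds $SPARK_HOME/python and Spark's bundled py4j zip to sys.path.
        findspark.init()
    import pyspark

    return pyspark
```

Calling `init_spark()` either returns the `pyspark` module or raises one error that names every missing prerequisite, which is easier to act on than a bare `No module named ...`.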

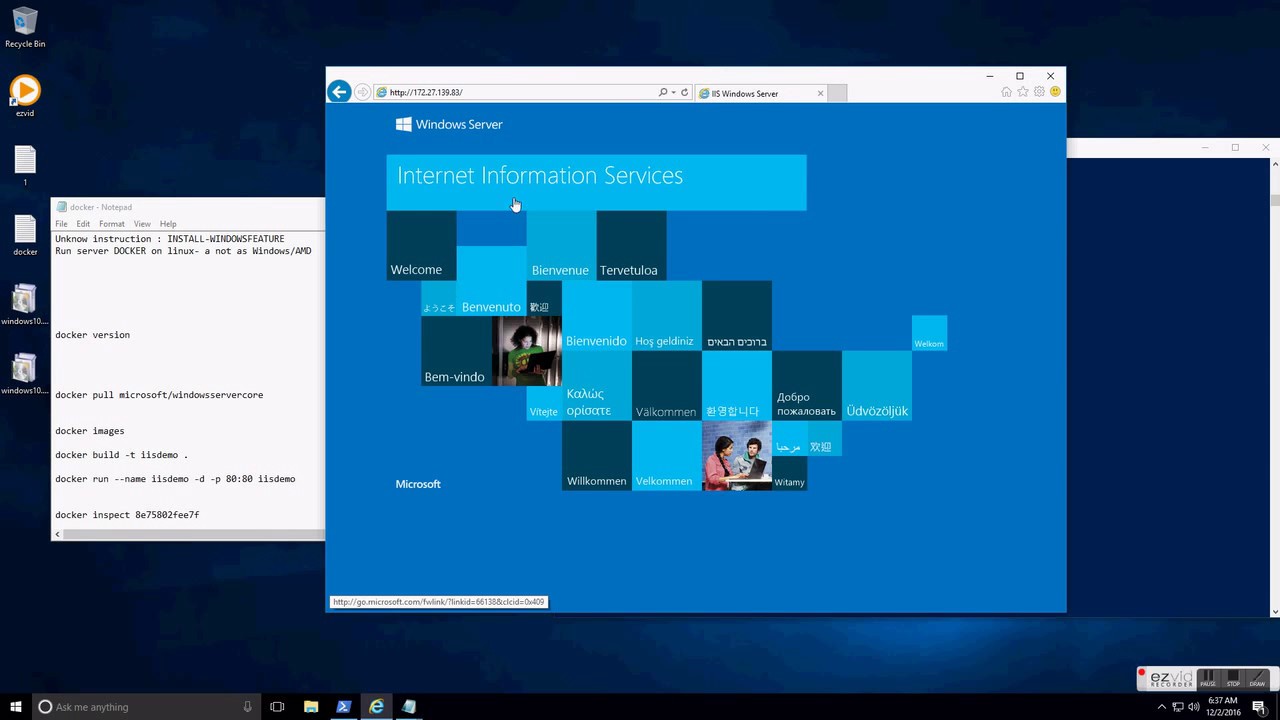

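To install Apache PySpark on Windows 10 and run it in Jupyter notebooks:

1. Install Java. Many guides state that PySpark requires Java 7 or later and Python 2.6 or later, but those minimums date from Spark 1.x. PySpark 3.0.1 runs on Java 8 or 11.
2. Install Python. Many other software tools also use Python, so a suitable version may already be on your machine. PySpark 3.0.1 supports Python 2.7 and Python 3.4 or later, but Python 2 and Python 3 versions before 3.6 are deprecated.
3. Install PySpark 3.0.1 from PyPI with `pip install pyspark==3.0.1`.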
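Before step 3, you can check both requirements from Python. The sketch below parses a Java version string and compares it against Java 8 and 11, then checks the Python version against 3.6. Choosing 3.6 as the floor is an assumption: it is the oldest Python 3 release that is not deprecated for Spark 3.0.x. Java uses two numbering schemes. Java 8 reports `1.8.0_...`, while Java 9 and later report the major number first, so the parser handles both. The function names are hypothetical.

```python
import sys

SUPPORTED_JAVA = {8, 11}  # Spark 3.0.x runs on Java 8 or 11
MIN_PYTHON = (3, 6)       # assumption: oldest non-deprecated Python 3 for Spark 3.0.x


def java_major(version):
    """Return the major Java version from strings like '1.8.0_281' or '11.0.9'."""
    parts = version.strip().strip('"').split(".")
    first = int(parts[0].split("-")[0].split("+")[0])
    if first == 1 and len(parts) > 1:
        return int(parts[1])  # legacy scheme: "1.8" means Java 8
    return first


def check_versions(java_version, python_version=sys.version_info[:2]):
    """Return a list of problems; an empty list means both versions look fine."""
    problems = []
    if java_major(java_version) not in SUPPORTED_JAVA:
        problems.append(f"Java {java_version} is not Java 8 or 11")
    if tuple(python_version) < MIN_PYTHON:
        problems.append(f"Python {python_version[0]}.{python_version[1]} is older than 3.6")
    return problems
```

To get the Java version string, run `java -version` in a command prompt. Note that it prints to stderr, not stdout. Pass the quoted version from that output to `check_versions`.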
